Facebook disappeared: A bolt from the blue

For a brief while, everyone wondered whether Facebook might vanish for good. In the current digital era, people have become dependent on social media sites like Facebook and Instagram, and for many of them life halted the instant Facebook stopped working. So what is this story about Facebook "vanishing"? Let's examine the Facebook disappearance in this blog post.

On October 4th, 2021, Facebook ran into severe difficulties because of a technical error. The app and all Facebook services were unavailable during an extended outage that started at around 11:40 a.m. ET, leaving millions of users to look elsewhere for their "interaction dopamine rush." The sites only came back at approximately 6 p.m. ET that same day. Although the outage lasted only a few hours, Facebook reportedly lost $50 billion in the first two of those hours alone.

At 15:51 UTC on October 4th, Cloudflare opened an internal incident titled "Facebook DNS lookup returning SERVFAIL," because its engineers were concerned that Cloudflare's DNS resolver 1.1.1.1 was malfunctioning. But as they prepared to post an update on their public status page, they realized that something more alarming was going on.

Social media quickly lit up with complaints, many of them on Twitter, reporting what Cloudflare's engineers soon confirmed: all of Facebook's services, including Instagram and WhatsApp, were down. Their DNS names were no longer resolving, and their infrastructure IP addresses were unreachable.

It was as though someone had abruptly "pulled the cables" connecting Facebook's data centers to the Internet. This was not a DNS issue in itself, but failing DNS was the first visible symptom of a much larger Facebook outage. In all, the outage lasted more than six hours.


So, what went wrong?

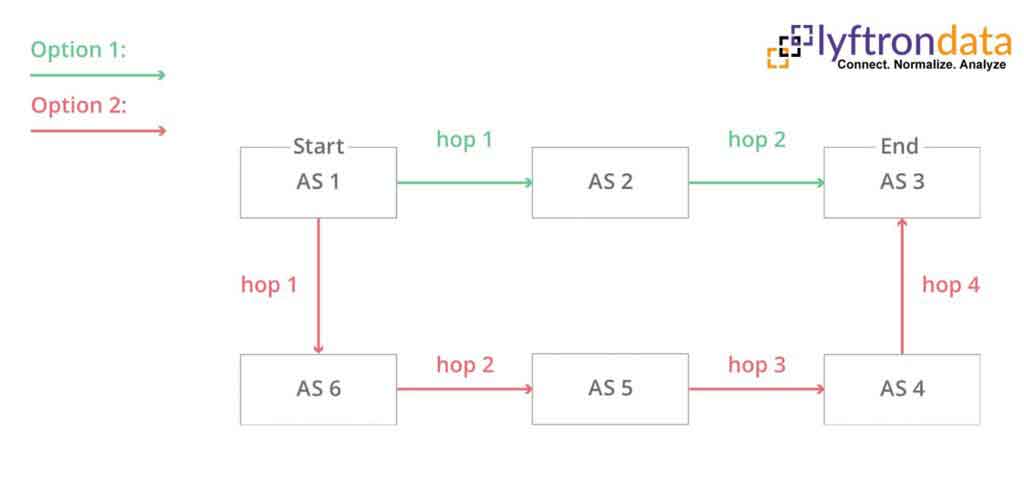

Facebook later shared details of what happened internally. From the outside, observers saw DNS and BGP problems, but the root cause was an internal configuration change. As a result, Facebook and its other sites vanished from the Internet, and Facebook's own employees struggled to get connectivity back.

BGP

Border Gateway Protocol, or BGP, is the mechanism that autonomous systems (AS) on the Internet use to exchange routing information. The large routers that power the Internet hold huge, constantly updated lists of the possible routes for delivering network packets to their final destinations. Without BGP, those routers would not know where to send traffic, and the Internet would not work.
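The lists of routes described above can be pictured with a small Python sketch. This is a toy model, not a BGP implementation: the `RouteTable` class, the documentation prefix `192.0.2.0/24`, and the private-use AS number 64512 are illustrative assumptions and are not values from the outage. It only shows the basic idea that a destination is reachable while some network announces a prefix covering it, and unreachable once that announcement is withdrawn.

```python
import ipaddress


class RouteTable:
    """Toy routing table mapping announced prefixes to an origin AS number."""

    def __init__(self):
        self.routes = {}  # ip_network -> origin AS number

    def announce(self, prefix, origin_as):
        """Record that origin_as claims to be able to deliver traffic for prefix."""
        self.routes[ipaddress.ip_network(prefix)] = origin_as

    def withdraw(self, prefix):
        """Forget a previously announced prefix (no error if it is unknown)."""
        self.routes.pop(ipaddress.ip_network(prefix), None)

    def lookup(self, address):
        """Return (prefix, origin_as) for the longest matching prefix, or None."""
        addr = ipaddress.ip_address(address)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        best = max(matches, key=lambda net: net.prefixlen)
        return str(best), self.routes[best]


table = RouteTable()
table.announce("192.0.2.0/24", 64512)  # hypothetical prefix hosting a nameserver
print(table.lookup("192.0.2.53"))      # a route exists, so packets can be sent
table.withdraw("192.0.2.0/24")
print(table.lookup("192.0.2.53"))      # None: nobody knows how to reach it
```

Real routers also compare path attributes and apply policy before picking a route; the sketch keeps only the longest-prefix match to stay short.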

According to Facebook's engineering team, the problem was caused by configuration changes on the backbone routers that manage network traffic between its data centers. These changes had a ripple effect on the way Facebook's data centers communicated, and as a result the services came to a halt.

How quickly could this be fixed? Considering how long the outage lasted, "not quickly" is perhaps the best answer. To recover, Facebook had to make sure that the routes it was advertising were correct and that they had propagated widely across the Internet. Put differently, it had to ensure that its maps were accurate and visible to everyone.

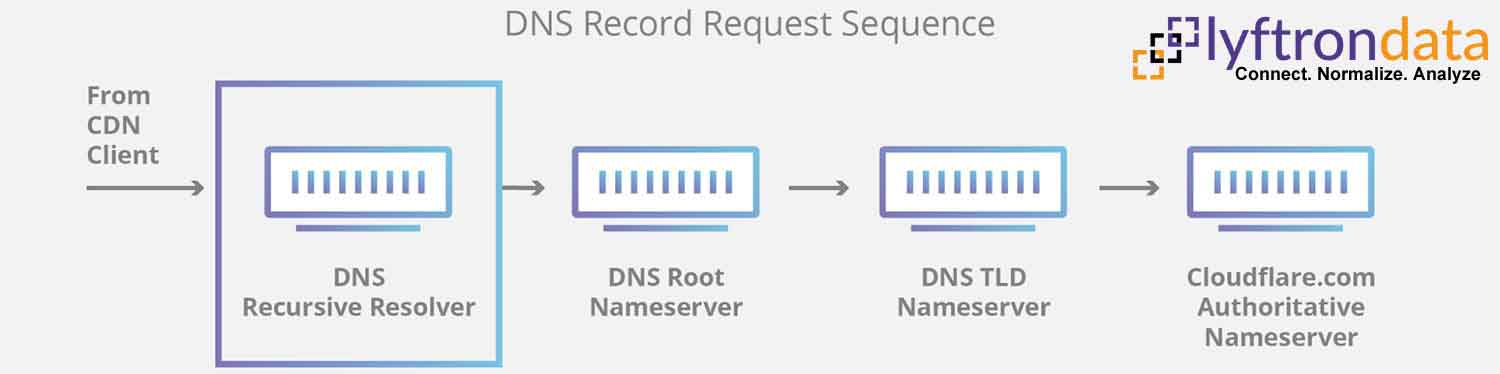

At 15:58 UTC, Cloudflare noticed that Facebook had stopped announcing the routes to its DNS prefixes. That suggested that, at the very least, Facebook's DNS servers were unreachable.

Consequently, Cloudflare's 1.1.1.1 DNS resolver could no longer answer queries asking for the IP address of facebook.com. Cloudflare keeps track of the BGP updates and announcements it sees in the global network, and the data it gathers shows how the Internet is connected and where traffic is meant to go.

A minute after Facebook's DNS servers became unreachable, Cloudflare engineers were sitting in a room, wondering why 1.1.1.1 was unable to resolve facebook.com and fearing that something was wrong with their own systems.


The impact on DNS

As a direct consequence, DNS resolvers all over the world stopped being able to resolve Facebook's domain names. This happens because DNS, like many other systems on the Internet, depends on routing to reach its servers. When someone types https://facebook.com into a browser, the DNS resolver, which is responsible for translating domain names into IP addresses, first checks whether it already has the answer in its cache and uses it if so.

If not, it tries to fetch the answer from the domain's nameservers, which are typically run by the entity that owns the domain. If the nameservers are unreachable or fail to respond for any other reason, a SERVFAIL is returned and the browser shows the user an error.
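The cache-then-nameserver sequence described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `ToyResolver`, the name `example.test`, the address `192.0.2.10`, and the 300-second TTL are all hypothetical, and the sketch ignores details such as caching of failures, multiple nameservers, and DNSSEC.

```python
SERVFAIL = "SERVFAIL"
NXDOMAIN = "NXDOMAIN"


class ToyResolver:
    """Resolver sketch: answer from cache if fresh, otherwise ask the nameserver."""

    def __init__(self, nameserver_records, ttl=300):
        self.nameserver_records = nameserver_records  # name -> IP on the authoritative side
        self.nameserver_reachable = True              # flips to False when routes disappear
        self.ttl = ttl                                # seconds an answer stays cached
        self.cache = {}                               # name -> (ip, expires_at)

    def resolve(self, name, now):
        """Return an IP address, NXDOMAIN, or SERVFAIL for name at time now (seconds)."""
        cached = self.cache.get(name)
        if cached is not None and cached[1] > now:
            return cached[0]                          # fresh cache hit
        if not self.nameserver_reachable:
            return SERVFAIL                           # cannot reach the nameserver
        if name not in self.nameserver_records:
            return NXDOMAIN                           # nameserver says the name does not exist
        ip = self.nameserver_records[name]
        self.cache[name] = (ip, now + self.ttl)
        return ip


resolver = ToyResolver({"example.test": "192.0.2.10"}, ttl=300)
print(resolver.resolve("example.test", now=0))    # fetched from the nameserver and cached
resolver.nameserver_reachable = False             # the routes to the nameserver are gone
print(resolver.resolve("example.test", now=100))  # still answered from the cache
print(resolver.resolve("example.test", now=400))  # cache entry expired: SERVFAIL
```

The last two calls show why an outage like this does not hit every user at the same instant: cached answers keep working until their TTL runs out.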

Because Facebook stopped announcing its DNS prefix routes through BGP, DNS resolvers had no way to reach its nameservers.

Consequently, well-known public DNS resolvers like 8.8.8.8, 1.1.1.1, and others started to issue (and cache) SERVFAIL responses. This is where application logic and human behavior take over and cause a second, huge effect: a flood of additional DNS traffic.

This happened partly because apps refuse to take an error for an answer and start retrying right away, and partly because end users who see an error start reloading pages or force-closing and relaunching their apps.
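To get a feel for how much extra traffic immediate retries create, the following Python sketch counts how many lookups a single client makes during an outage window. Everything here is an assumption made for illustration: the function names, the one-second retry interval, and the exponential backoff parameters are hypothetical and do not describe how Facebook's apps actually behave. Backoff is included only as a point of comparison.

```python
def attempts_immediate(window_seconds, retry_interval=1.0):
    """Count lookups when a client retries at a fixed, short interval."""
    return int(window_seconds // retry_interval) + 1


def attempts_with_backoff(window_seconds, base=1.0, factor=2.0, cap=300.0):
    """Count lookups when the wait between retries grows exponentially up to cap."""
    attempts = 0
    elapsed = 0.0
    delay = base
    while elapsed <= window_seconds:
        attempts += 1                      # one lookup at the current time
        elapsed += delay                   # wait before the next lookup
        delay = min(delay * factor, cap)   # grow the wait, but never beyond cap
    return attempts


window = 600  # a ten-minute slice of the outage, in seconds
print(attempts_immediate(window))     # 601 lookups from one impatient client
print(attempts_with_backoff(window))  # 10 lookups from one client that backs off
```

Multiplied across a very large number of devices, the gap between these two numbers gives a rough sense of why resolvers saw so much more traffic than usual.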

Because Facebook and its sites generate such a massive volume of requests, DNS resolvers across the globe were suddenly handling several times as many queries as usual, which could cause latency and timeout issues for other platforms. Fortunately, 1.1.1.1 was built to be free, private, fast, and scalable, so its users saw little disruption.

People wanted to know more about what was going on and began looking for alternatives. When Facebook went down, DNS queries to Twitter, Signal, and other messaging and social media platforms spiked.

Events like this are a brief reminder that the Internet is a massive, interconnected system made up of billions of devices and algorithms. It works for about five billion users around the world because of collaboration, standardization, and trust between entities.

In summary

After a hectic day, Facebook's network showed renewed BGP activity at around 21:00 UTC, peaking at 21:17 UTC. The DNS name "facebook.com" had stopped resolving on Cloudflare's DNS resolver 1.1.1.1 at around 15:50 UTC; it became available again at 21:20 UTC. Facebook eventually returned to the global Internet, and DNS resolution worked again.
